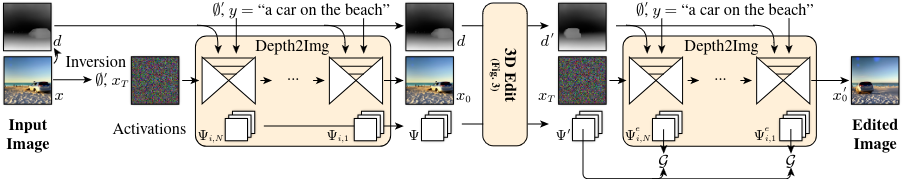

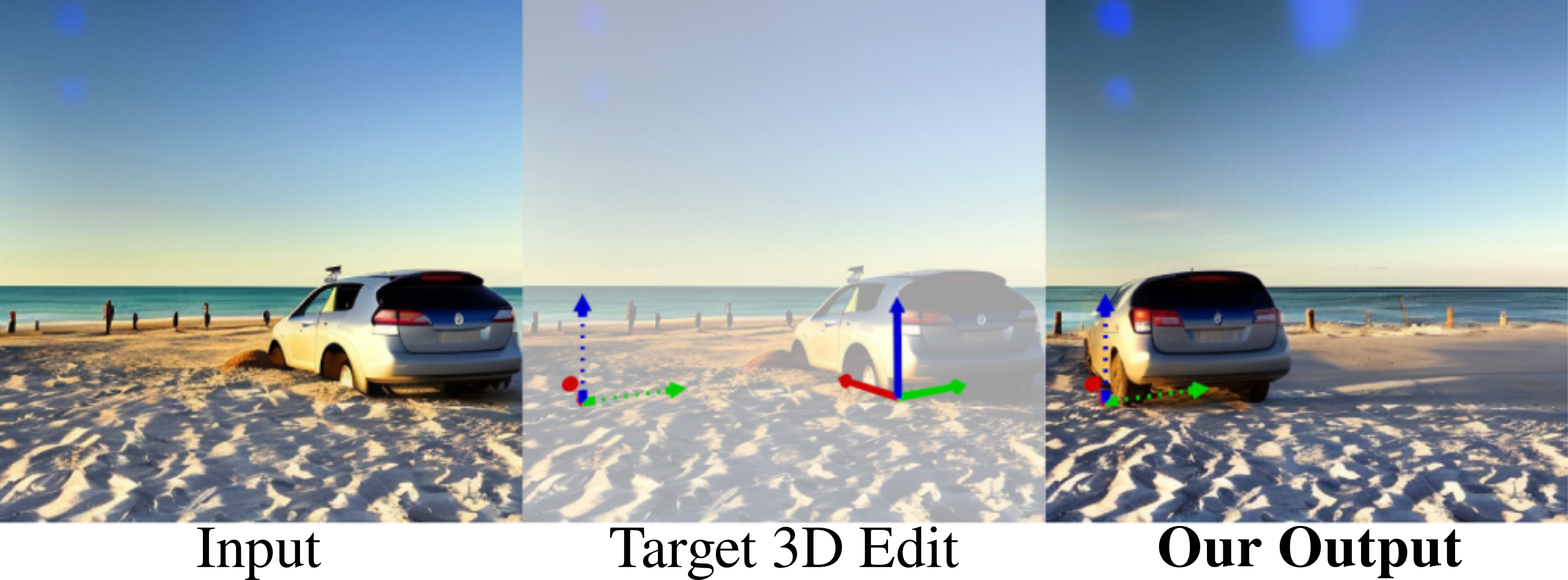

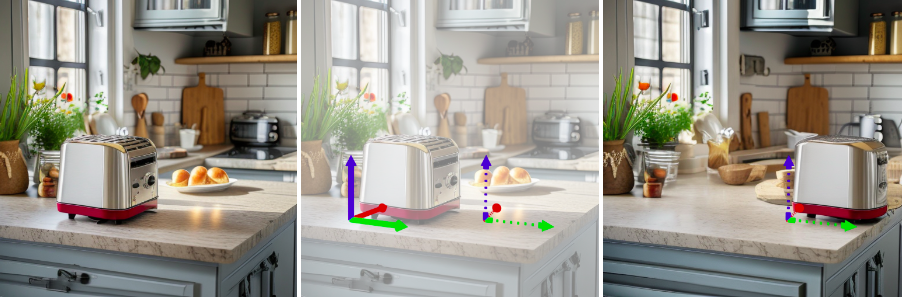

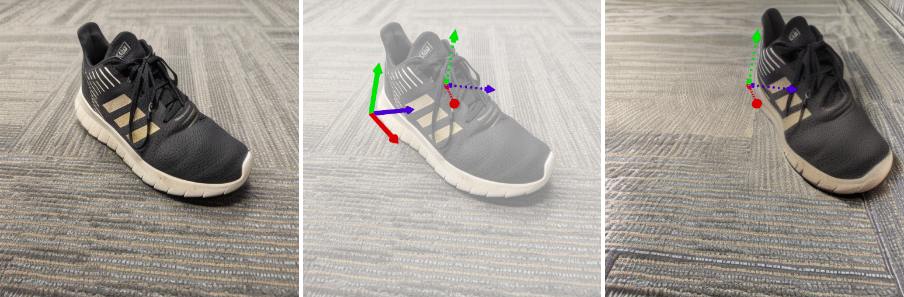

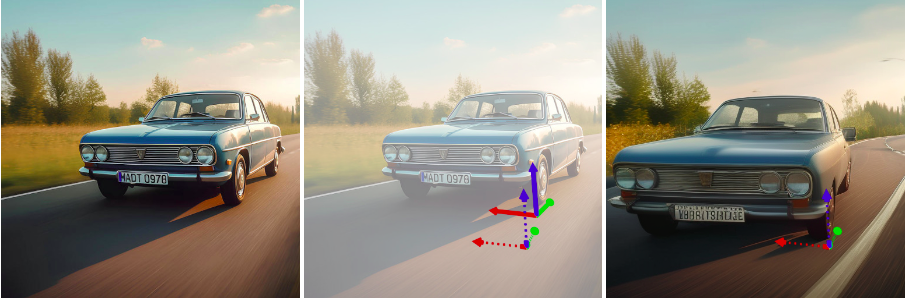

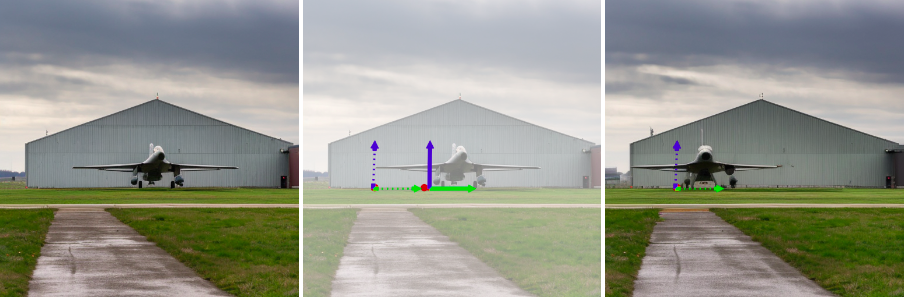

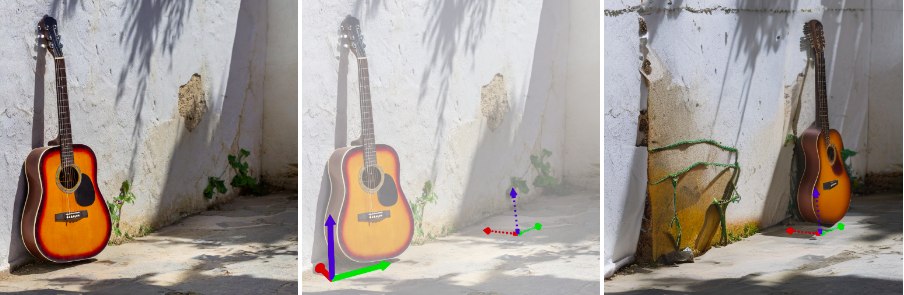

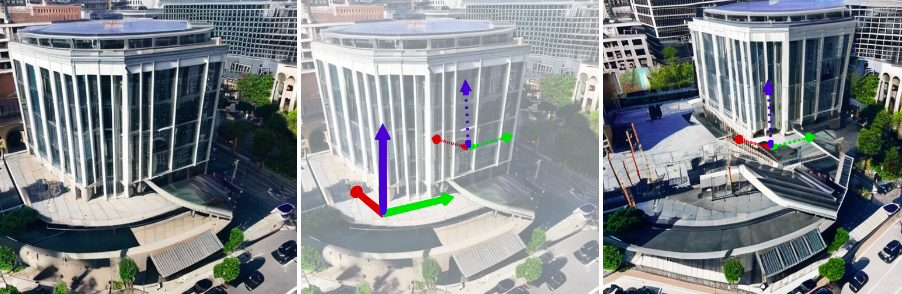

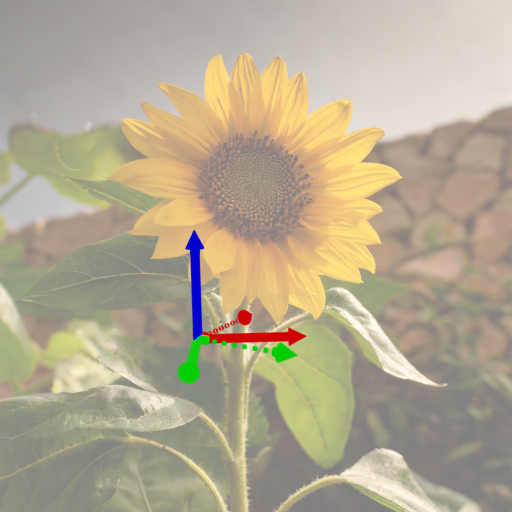

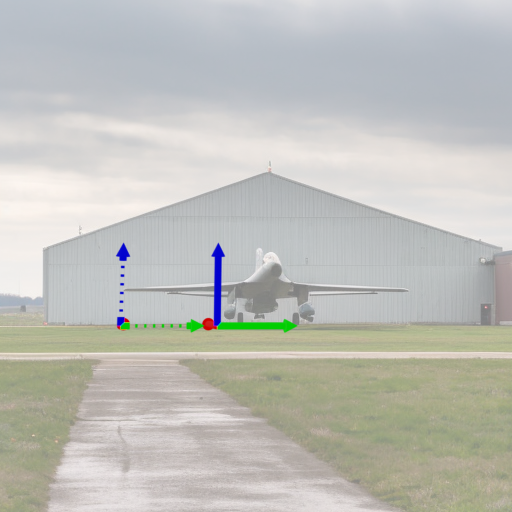

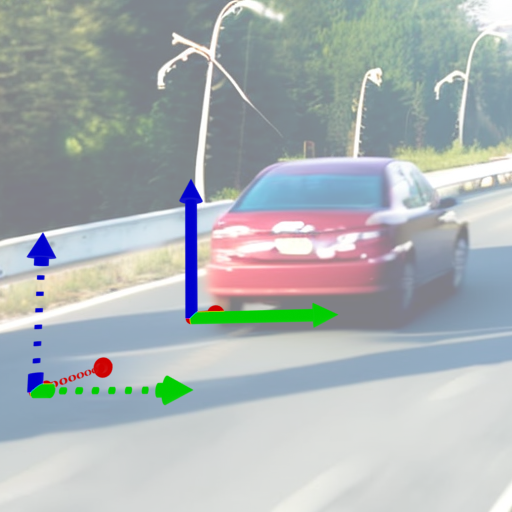

Diffusion Handles is a novel approach to enabling 3D object edits on diffusion images. We accomplish these edits using existing pre-trained diffusion models and 2D image depth estimation, without any fine-tuning or 3D object retrieval. The edited results remain plausible and photo-real, and preserve object identity.

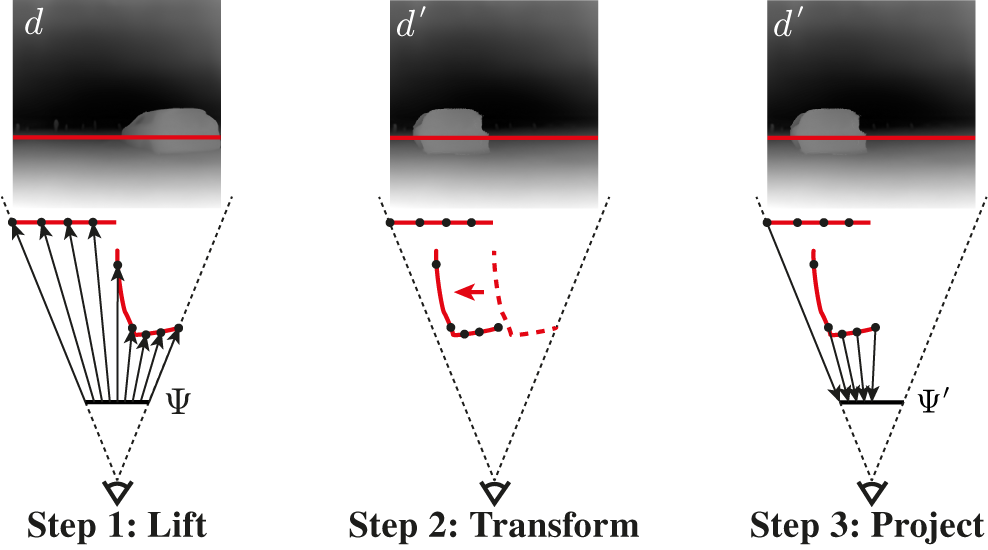

Diffusion Handles addresses a critically missing facet of generative image-based creative design and significantly advances the state of the art in generative image editing. Our key insight is to lift diffusion activations for an object to 3D using a proxy depth, 3D-transform the depth and associated activations, and project them back to image space. The diffusion process, applied to the manipulated activations with identity control, produces plausible edited images showing complex 3D occlusion and lighting effects. We evaluate Diffusion Handles quantitatively, on a large synthetic data benchmark, and qualitatively, through a user study, showing our output to be more plausible and better than prior art at both 3D editing and identity control.
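The lift, transform, and project-back step can be illustrated with a small NumPy sketch. This is not the authors' implementation: the function name `warp_features`, the pinhole intrinsics `K`, the rigid transform `T`, and the nearest-pixel forward splatting with a depth test are all assumptions made for illustration. The feature map stands in for diffusion activations, and neither the diffusion process nor identity control is shown.

```python
import numpy as np

def warp_features(feats, depth, K, T):
    """Forward-warp per-pixel features through a rigid 3D transform.

    feats: (H, W, C) features, depth: (H, W) positive depths,
    K: (3, 3) assumed pinhole intrinsics, T: (4, 4) rigid transform
    in camera space. Returns warped (H, W, C) features and an
    (H, W) boolean mask of pixels that received a value.
    """
    H, W, C = feats.shape
    v, u = np.mgrid[0:H, 0:W]
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3).astype(np.float64)

    # Lift: back-project each pixel to a 3D point using its depth.
    pts = (pix @ np.linalg.inv(K).T) * depth.reshape(-1, 1)
    # Transform: apply the 3D edit (rotation and translation).
    pts = pts @ T[:3, :3].T + T[:3, 3]

    # Project: keep points in front of the camera and inside the image.
    src = np.flatnonzero(pts[:, 2] > 1e-6)
    proj = pts[src] @ K.T
    uu = np.rint(proj[:, 0] / proj[:, 2]).astype(int)
    vv = np.rint(proj[:, 1] / proj[:, 2]).astype(int)
    inside = (uu >= 0) & (uu < W) & (vv >= 0) & (vv < H)
    src, uu, vv = src[inside], uu[inside], vv[inside]
    zz = pts[src, 2]

    # Depth test: for each target pixel, keep only the nearest point.
    target = vv * W + uu
    order = np.lexsort((zz, target))  # sort by target pixel, then by depth
    sorted_target = target[order]
    first = np.ones(len(order), dtype=bool)
    first[1:] = sorted_target[1:] != sorted_target[:-1]
    winners = order[first]

    out = np.zeros((H * W, C), dtype=feats.dtype)
    mask = np.zeros(H * W, dtype=bool)
    out[target[winners]] = feats.reshape(-1, C)[src[winners]]
    mask[target[winners]] = True
    return out.reshape(H, W, C), mask.reshape(H, W)
```

Pixels where the returned mask is `False` are disocclusions that the warp cannot fill. In this sketch they are simply left at zero.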

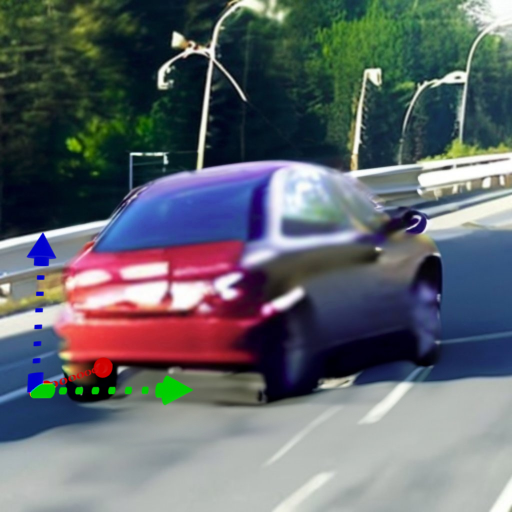

| Input | Target Edit | Ours | Obj. Stitch | Zero123 | 3DIT |
|---|---|---|---|---|---|

(Comparisons to the state of the art on a much larger set of images are available here for images with estimated depth & synthetic depth.)

ObjectStitch is a generative object compositing method which we adapt for object-level editing on scenes.

Zero-1-2-3 is a diffusion-based novel-view synthesis method which we use in conjunction with inpainting to perform object-level 3D-aware image editing.

Object 3DIT is a language-guided model for 3D-aware image editing.

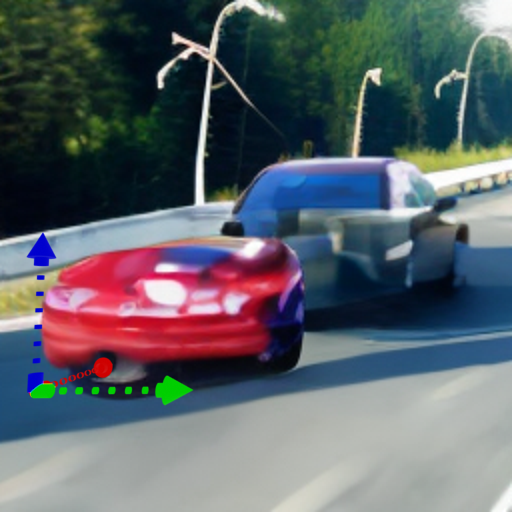

Start Frame

End Frame
